2022

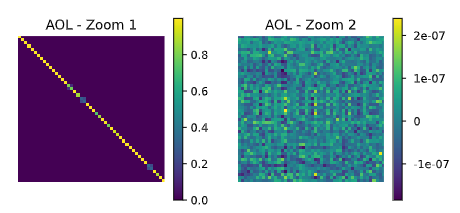

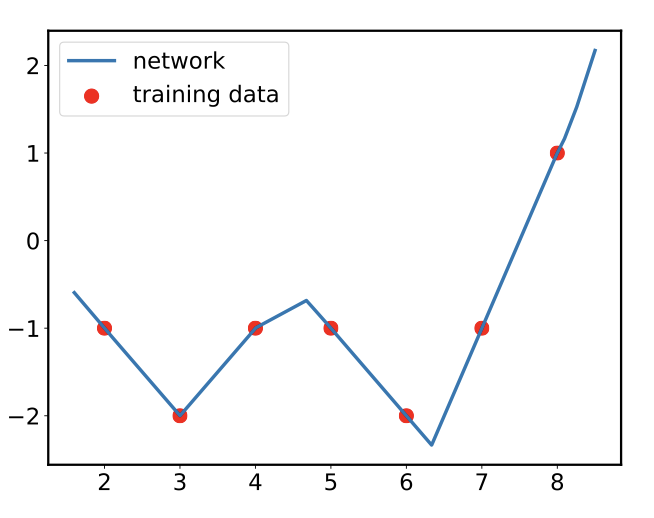

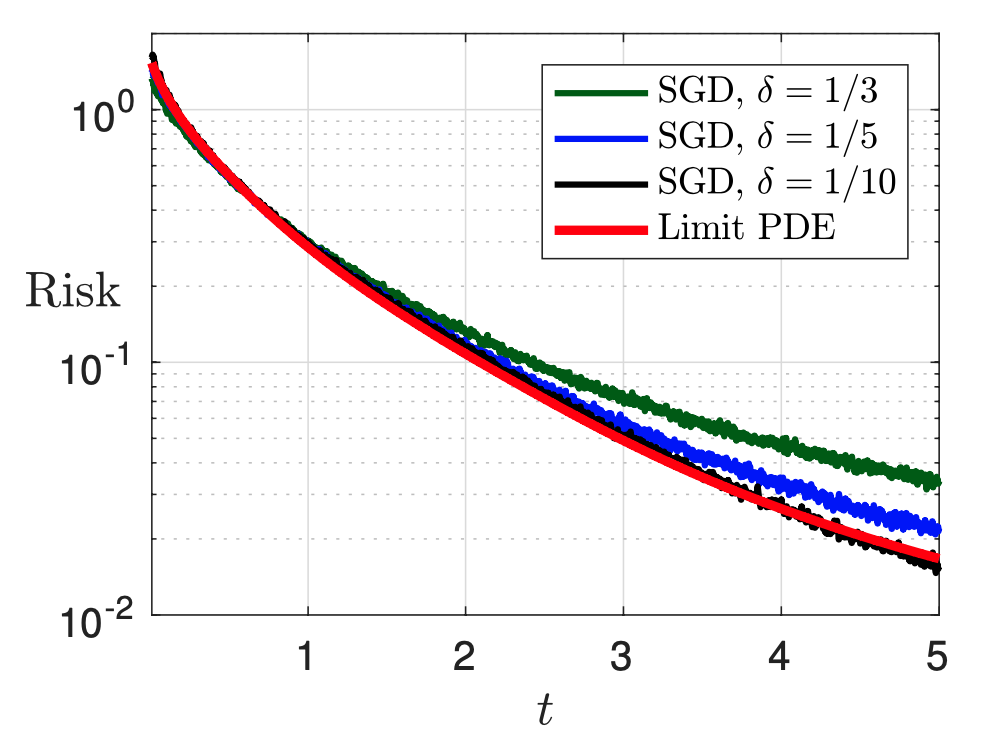

N. Konstantinov, C. H. Lampert. Fairness-Aware PAC Learning from Corrupted Data. JMLR 23 (2022) 1-60

B. Prach, C. H. Lampert. Almost-Orthogonal Layers for Efficient General-Purpose Lipschitz Networks. ECCV 2022

M. Mondelli and R. Venkataramanan, “Approximate Message Passing with Spectral Initialization for Generalized Linear Models”, STAT 2022

A. Shevchenko, V. Kungurtsev, and M. Mondelli, “Mean-field Analysis of Piecewise Linear Solutions for Wide ReLU Networks”, JMLR 2022


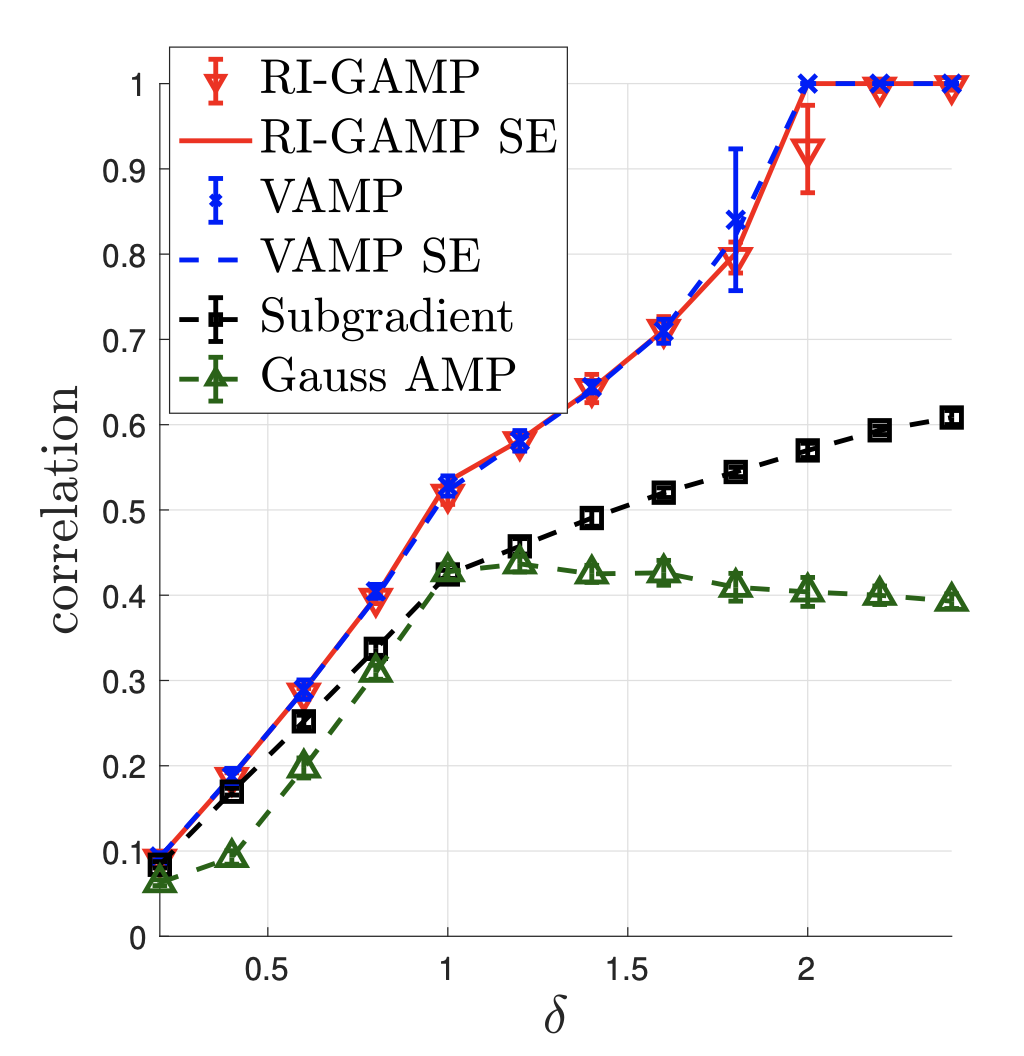

R. Venkataramanan, K. Kögler, and M. Mondelli, “Estimation in Rotationally Invariant Generalized Linear Models via Approximate Message Passing”, ICML 2022

D. Fathollahi and M. Mondelli, “Polar Coded Computing: The Role of the Scaling Exponent”, ISIT 2022


2021

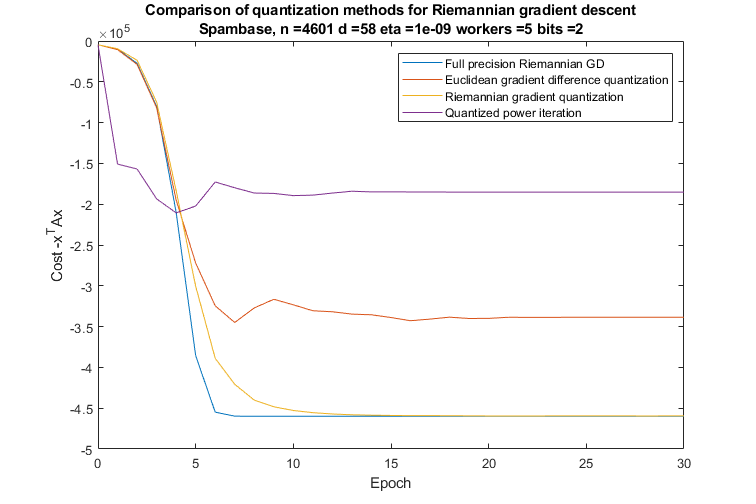

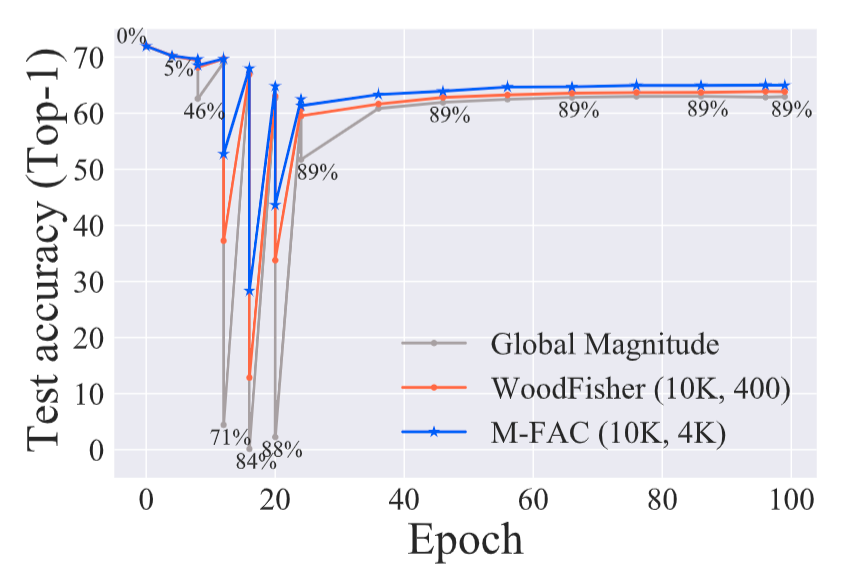

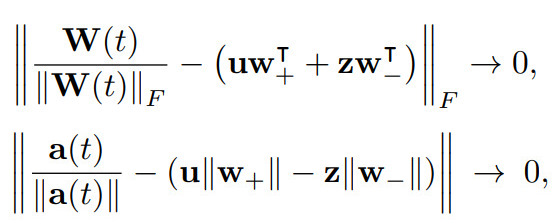

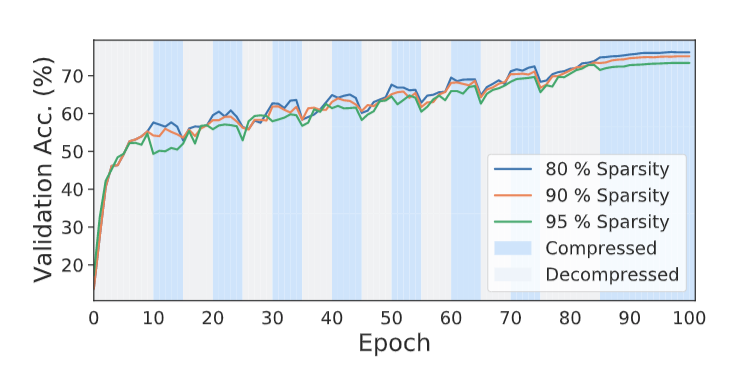

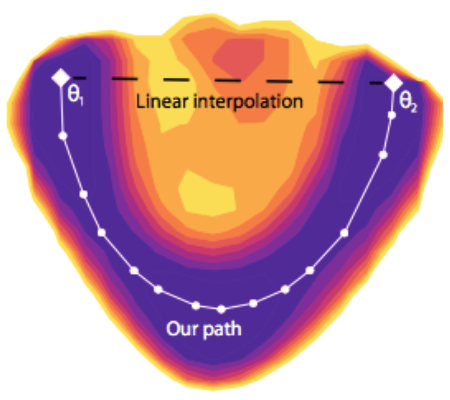

AC/DC: ALTERNATING COMPRESSED/DECOMPRESSED TRAINING OF DEEP NEURAL NETWORKS. NeurIPS 2021. Peste, Iofinova, Vladu, Alistarh

DISTRIBUTED PRINCIPAL COMPONENT ANALYSIS WITH LIMITED COMMUNICATION. NeurIPS 2021. Alimisis, Davies, Vandereycken, Alistarh

WHEN ARE SOLUTIONS CONNECTED IN DEEP NETWORKS? NeurIPS 2021. Nguyen, Bréchet, Mondelli

M-FAC: EFFICIENT MATRIX-FREE APPROXIMATIONS OF SECOND-ORDER INFORMATION. NeurIPS 2021. Frantar, Kurtic, Alistarh


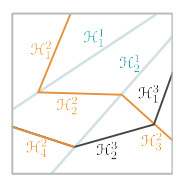

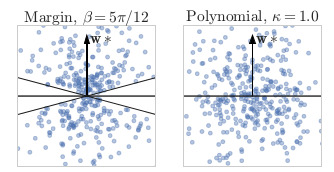

THE INDUCTIVE BIAS OF RELU NETWORKS ON ORTHOGONALLY SEPARABLE DATA. ICLR 2021. Phuong, Lampert

BYZANTINE-RESILIENT NON-CONVEX STOCHASTIC GRADIENT DESCENT. ICLR 2021. Allen-Zhu, Ebrahimian, Li, Alistarh

TOWARDS TIGHT COMMUNICATION LOWER BOUNDS FOR DISTRIBUTED OPTIMIZATION. NeurIPS 2021. Korhonen, Alistarh

FULLY-ASYNCHRONOUS DECENTRALIZED SGD WITH QUANTIZED AND LOCAL UPDATES. NeurIPS 2021. Nadiradze, Sabour, Davies, Li, Alistarh


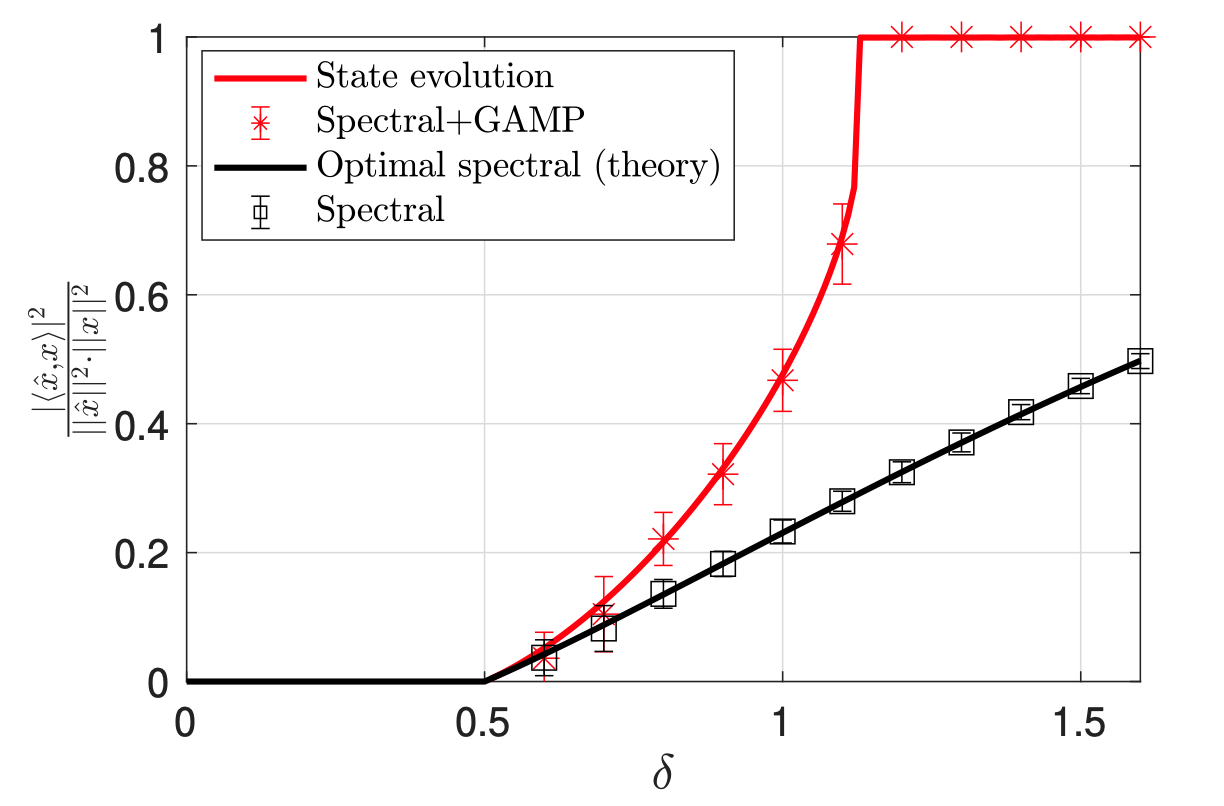

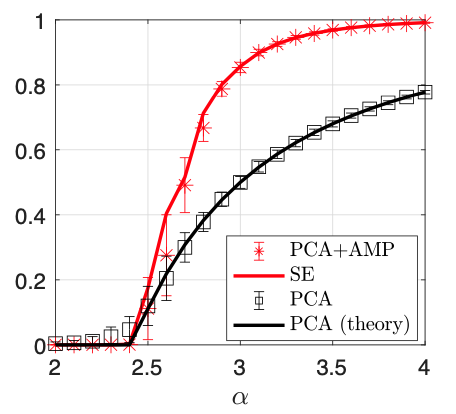

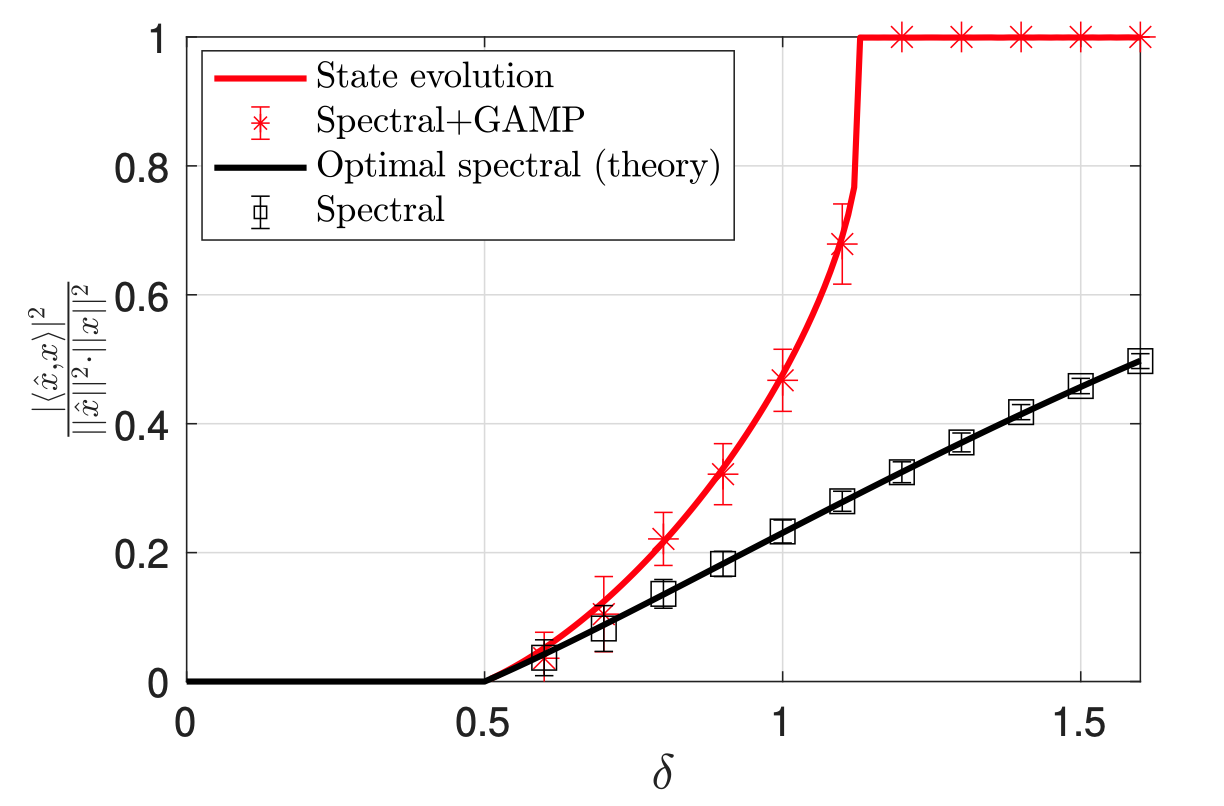

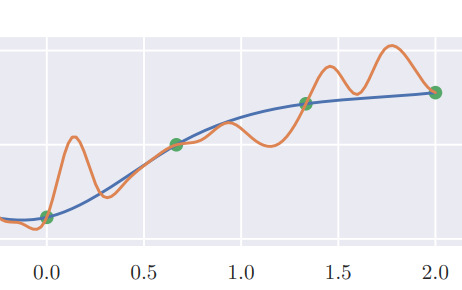

PCA INITIALIZATION FOR APPROXIMATE MESSAGE PASSING IN ROTATIONALLY INVARIANT MODELS. NeurIPS 2021. Mondelli, Venkataramanan

APPROXIMATE MESSAGE PASSING WITH SPECTRAL INITIALIZATION FOR GENERALIZED LINEAR MODELS. AISTATS 2021. Mondelli, Venkataramanan

TIGHT BOUNDS ON THE SMALLEST EIGENVALUE OF THE NEURAL TANGENT KERNEL FOR DEEP RELU NETWORKS. ICML 2021. Nguyen, Mondelli, Montufar

ONE-SIDED FRANK-WOLFE ALGORITHMS FOR SADDLE PROBLEMS. ICML 2021. Kolmogorov, Pock


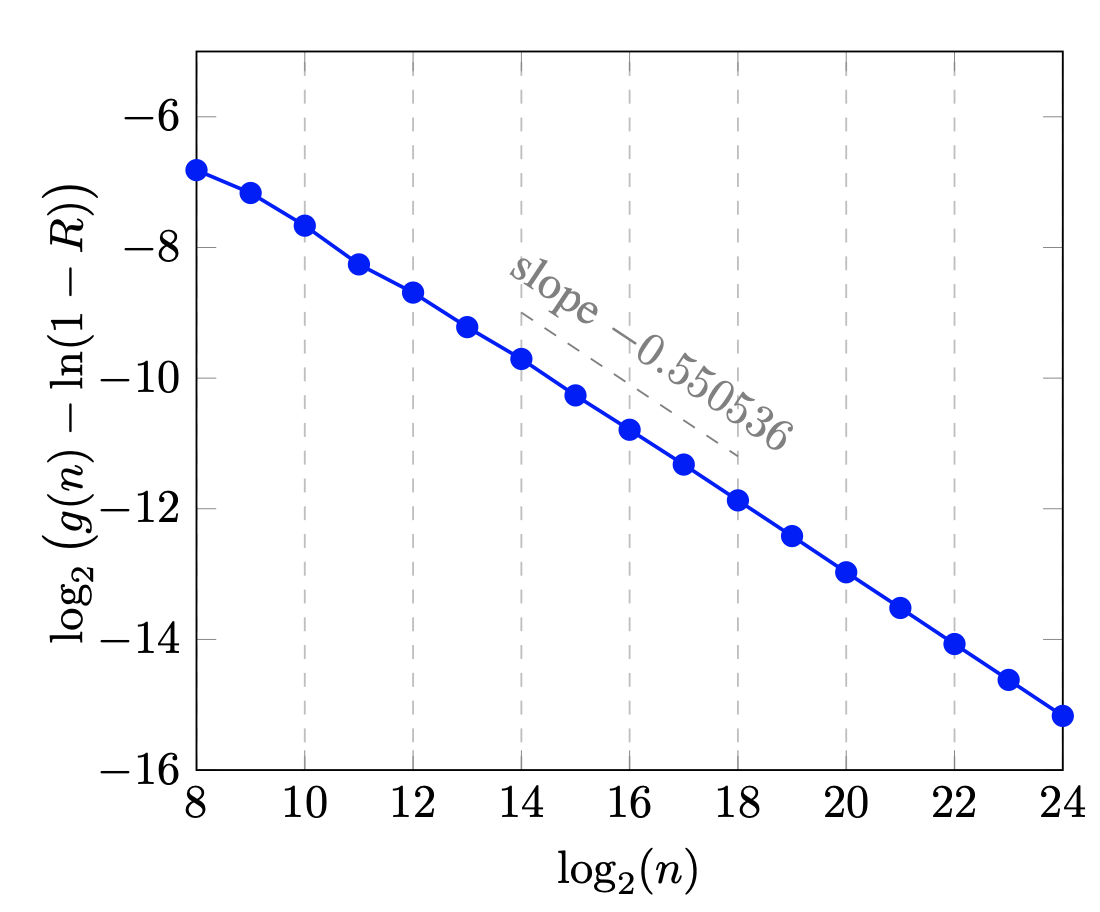

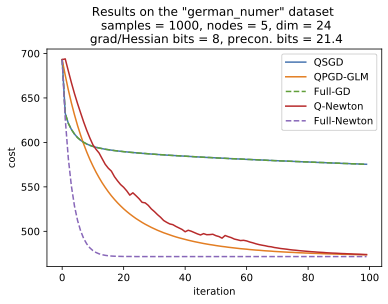

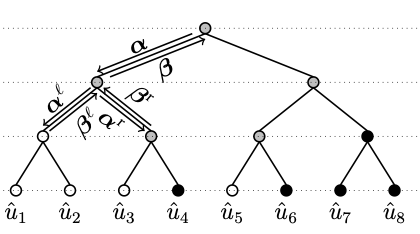

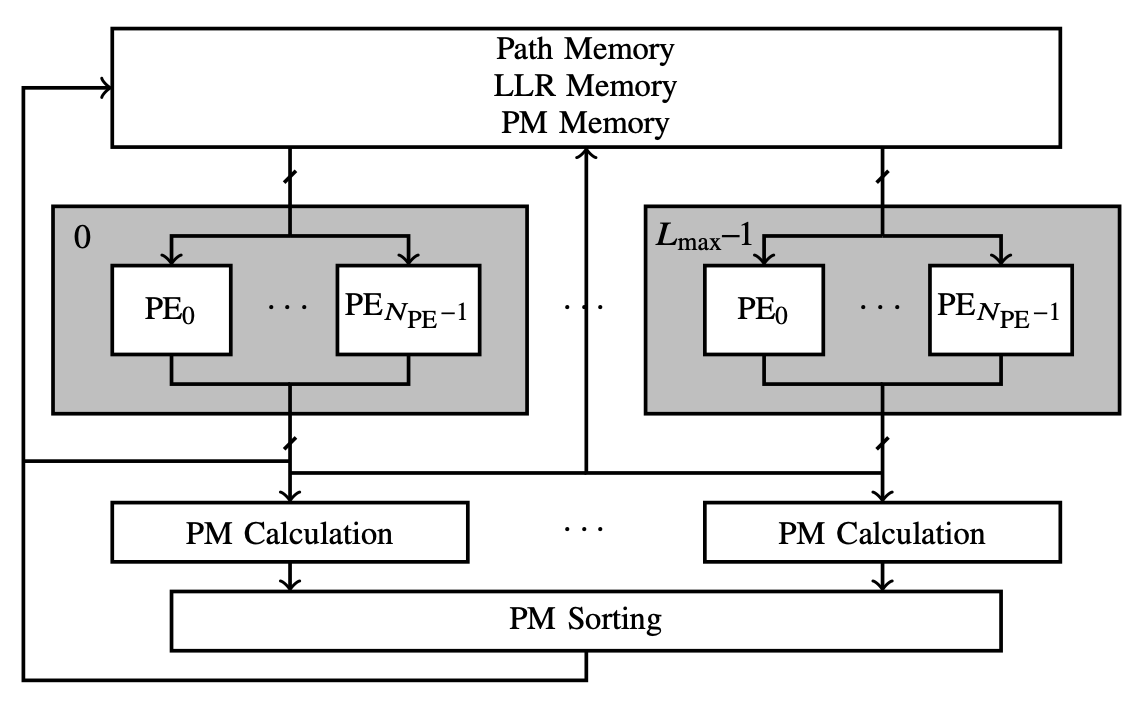

COMMUNICATION-EFFICIENT DISTRIBUTED OPTIMIZATION WITH QUANTIZED PRECONDITIONERS. ICML 2021. Alimisis, Davies, Alistarh

GENOMIC ARCHITECTURE AND PREDICTION OF CENSORED TIME-TO-EVENT PHENOTYPES WITH A BAYESIAN GENOME-WIDE ANALYSIS. Nature Communications. Ojavee, Robinson

PARALLELISM VERSUS LATENCY IN SIMPLIFIED SUCCESSIVE-CANCELLATION DECODING OF POLAR CODES. ISIT 2021. Hashemi, Mondelli, Fazeli, Vardy, Cioffi, Goldsmith

SPARSE MULTI-DECODER RECURSIVE PROJECTION AGGREGATION FOR REED-MULLER CODES. ISIT 2021. Fathollahi, Farsad, Hashemi, Mondelli


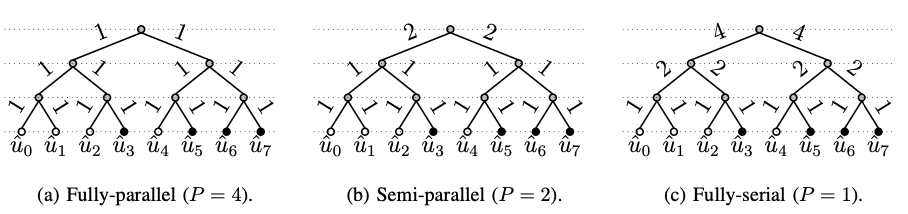

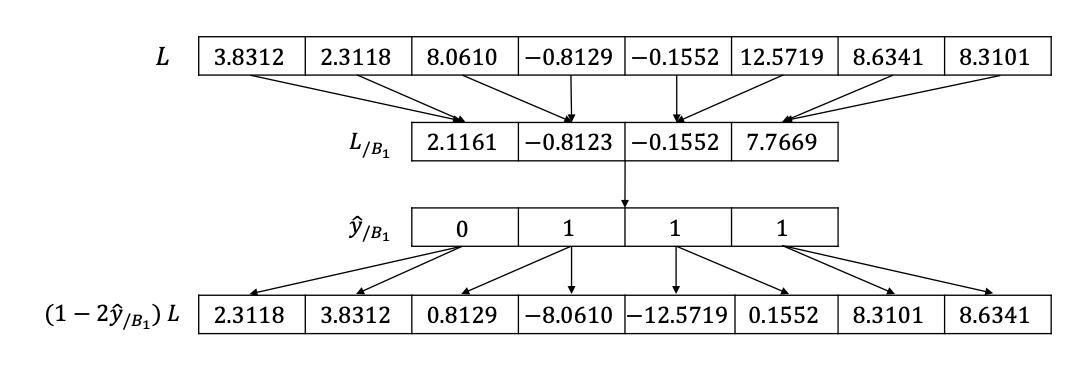

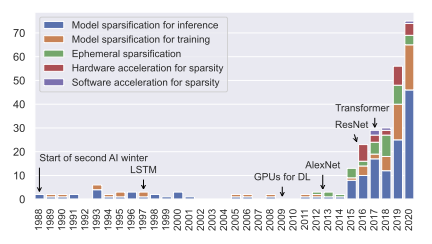

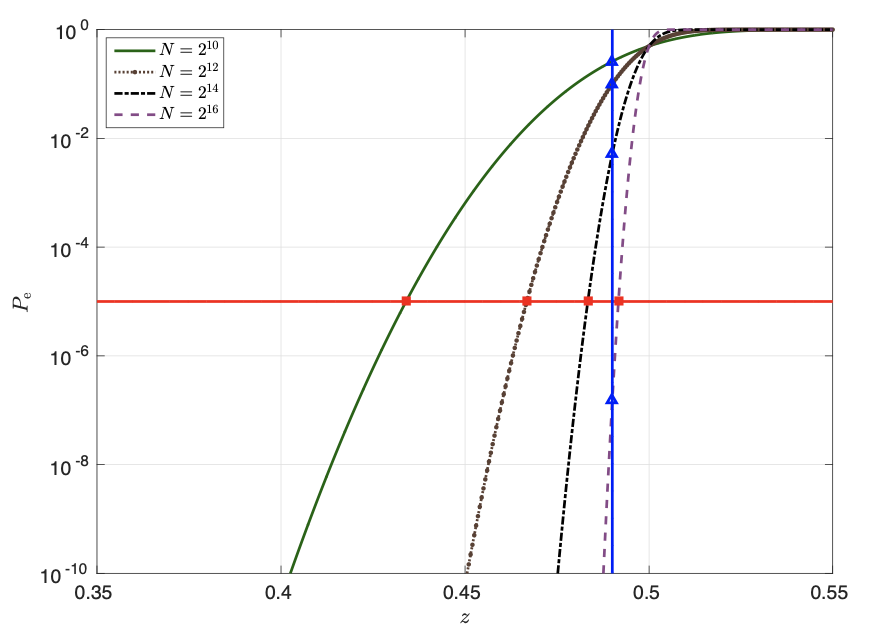

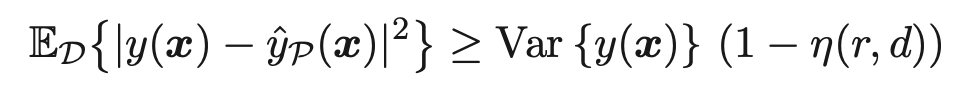

NEW BOUNDS FOR DISTRIBUTED MEAN ESTIMATION AND VARIANCE REDUCTION. ICLR 2021. Davies, Gurunathan, Moshrefi, Ashkboos, Alistarh

OPTIMAL COMBINATION OF LINEAR AND SPECTRAL ESTIMATORS FOR GENERALIZED LINEAR MODELS. Foundations of Computational Mathematics. Mondelli, Thrampoulidis, Venkataramanan

ELASTIC CONSISTENCY: A PRACTICAL CONSISTENCY MODEL FOR DISTRIBUTED STOCHASTIC GRADIENT DESCENT. AAAI 2021. Nadiradze, Markov, Chatterjee, Kungurtsev, Alistarh

SPARSITY IN DEEP LEARNING: PRUNING AND GROWTH FOR EFFICIENT INFERENCE AND TRAINING IN NEURAL NETWORKS. JMLR. Hoefler, Alistarh, Ben-Nun, Dryden, Peste

SUBLINEAR LATENCY FOR SIMPLIFIED SUCCESSIVE CANCELLATION DECODING OF POLAR CODES. IEEE Transactions on Wireless Communications. Mondelli, Hashemi, Cioffi, Goldsmith

2020

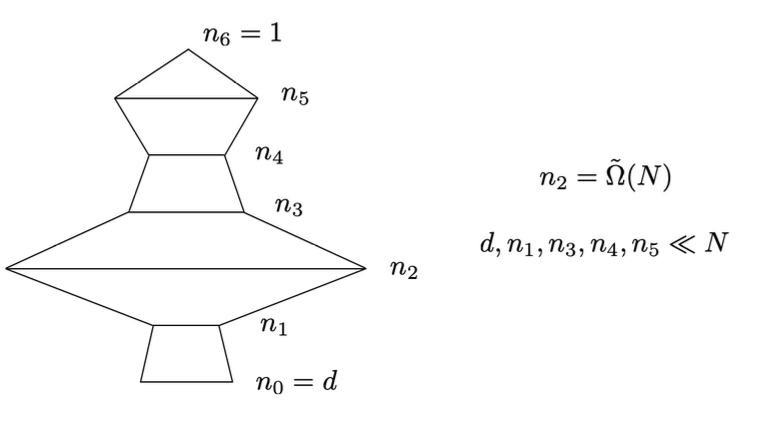

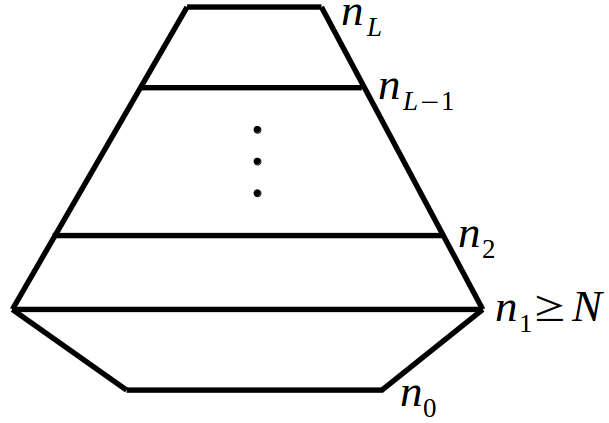

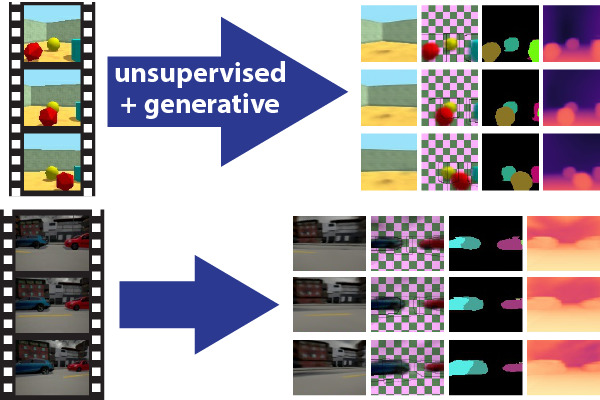

GLOBAL CONVERGENCE OF DEEP NETWORKS WITH ONE WIDE LAYER FOLLOWED BY PYRAMIDAL TOPOLOGY. NeurIPS 2020. Nguyen, Mondelli

WOODFISHER: EFFICIENT SECOND-ORDER APPROXIMATION FOR NEURAL NETWORK COMPRESSION. NeurIPS 2020. Singh, Alistarh

RELAXED SCHEDULING FOR SCALABLE BELIEF PROPAGATION. NeurIPS 2020. Aksenov, Alistarh

UNSUPERVISED OBJECT-CENTRIC VIDEO GENERATION AND DECOMPOSITION IN 3D. NeurIPS 2020. Henderson, Lampert

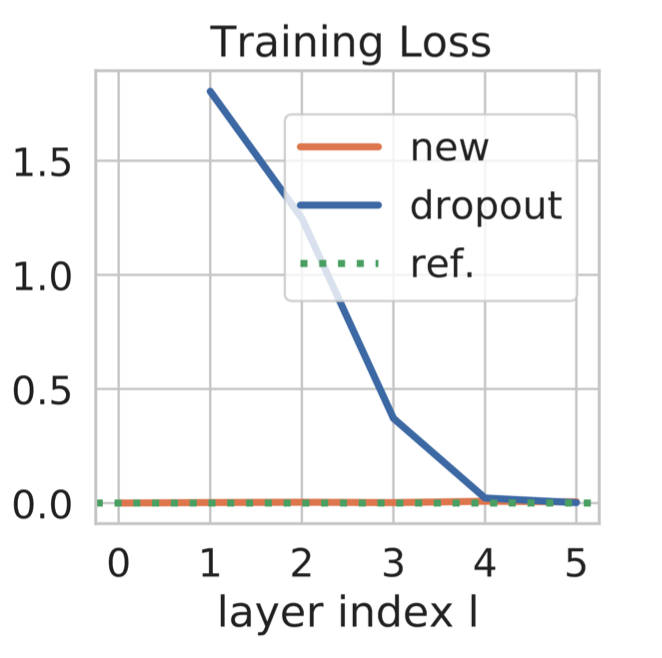

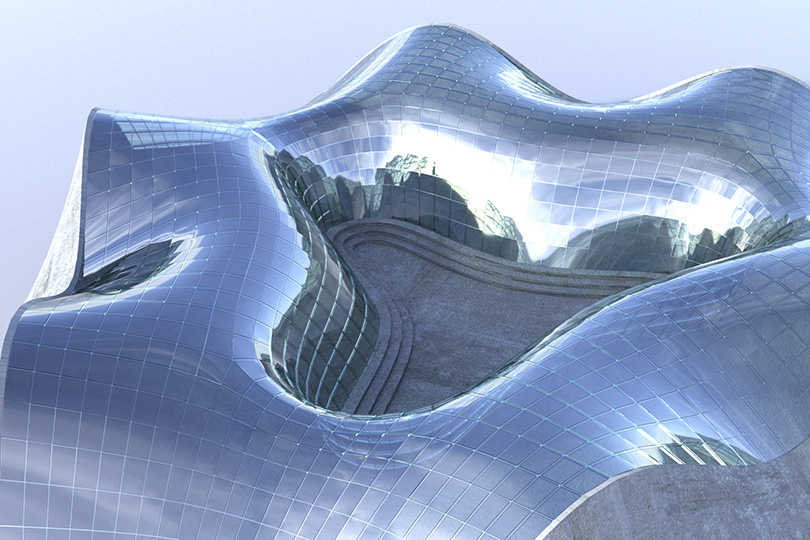

COMPUTATIONAL DESIGN OF COLD BENT GLASS FAÇADES. ACM Transactions on Graphics 39(6) (SIGGRAPH Asia 2020). Gavriil, Guseinov, Pérez, Pellis, Henderson, Rist, Pottmann, Bickel

BINARY LINEAR CODES WITH OPTIMAL SCALING: POLAR CODES WITH LARGE KERNELS. IEEE Transactions on Information Theory. Fazeli, Hassani, Mondelli, Vardy

DOES SGD IMPLICITLY OPTIMIZE FOR SMOOTHNESS? GCPR 2020. Volhejn, Lampert

LANDSCAPE CONNECTIVITY AND DROPOUT STABILITY OF SGD SOLUTIONS FOR OVER-PARAMETERIZED NEURAL NETWORKS. ICML 2020. Shevchenko, Mondelli

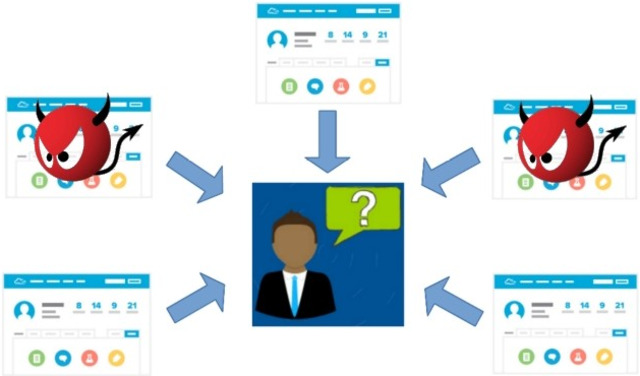

ON THE SAMPLE COMPLEXITY OF ADVERSARIAL MULTI-SOURCE PAC LEARNING. ICML 2020. Konstantinov, Frantar, Alistarh, Lampert

PROBABILISTIC INFERENCE OF THE GENETIC ARCHITECTURE OF FUNCTIONAL ENRICHMENT OF COMPLEX TRAITS. medRxiv. Patxot, Robinson

BAYESIAN REASSESSMENT OF THE EPIGENETIC ARCHITECTURE OF COMPLEX TRAITS. Nature Communications. Trejo Banos, Robinson

FUNCTIONAL VS. PARAMETRIC EQUIVALENCE OF RELU NETWORKS. ICLR 2020. Phuong, Lampert

LOCALIZING GROUPED INSTANCES FOR EFFICIENT DETECTION IN LOW-RESOURCE SCENARIOS. WACV 2020. Royer, Lampert

ANALYSIS OF A TWO-LAYER NEURAL NETWORK VIA DISPLACEMENT CONVEXITY. Annals of Statistics. Javanmard, Mondelli, Montanari

A FLEXIBLE SELECTION SCHEME FOR MINIMUM-EFFORT TRANSFER LEARNING. WACV 2020. Royer, Lampert

2019

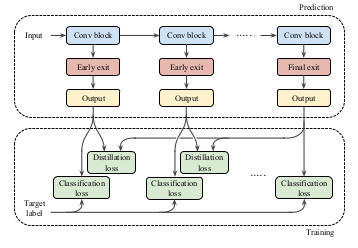

RATE-FLEXIBLE FAST POLAR DECODERS. IEEE Transactions on Signal Processing. Hashemi, Condo, Mondelli, Gross

DISTILLATION-BASED TRAINING FOR MULTI-EXIT ARCHITECTURES. ICCV 2019. Phuong, Lampert

KS(CONF): A LIGHT-WEIGHT TEST IF A MULTICLASS CLASSIFIER OPERATES OUTSIDE OF ITS SPECIFICATIONS. IJCV. Sun, Lampert

FUNCTION NORMS FOR NEURAL NETWORKS. Workshop on Statistical Deep Learning in Computer Vision at ICCV 2019. Triki, Berman, Kolmogorov, Blaschko

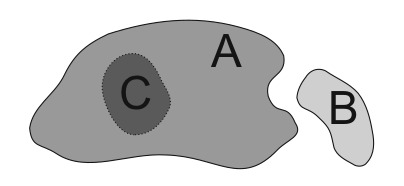

TESTING THE COMPLEXITY OF A VALUED CSP LANGUAGE. ICALP 2019. Kolmogorov

TOWARDS UNDERSTANDING KNOWLEDGE DISTILLATION. ICML 2019. Phuong, Lampert

ROBUST LEARNING FROM UNTRUSTED SOURCES. ICML 2019. Konstantinov, Lampert

MAP INFERENCE VIA BLOCK-COORDINATE FRANK-WOLFE ALGORITHM. CVPR 2019. Swoboda, Kolmogorov

ON THE CONNECTION BETWEEN LEARNING TWO-LAYERS NEURAL NETWORKS AND TENSOR DECOMPOSITION. AISTATS 2019. Mondelli, Montanari